Google’s QUIC protocol: moving the web from TCP to UDP

Mattias Geniar, Saturday, July 30, 2016 - last modified: Sunday, December 4, 2016

The QUIC protocol (Quick UDP Internet Connections) is an entirely new protocol for the web developed on top of UDP instead of TCP.

Some are even (jokingly) calling it TCP/2.

I only learned about QUIC a few weeks ago while doing the curl & libcurl episode of the SysCast podcast.

The really interesting bit about the QUIC protocol is the move to UDP.

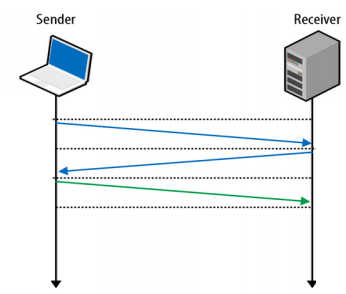

Now, the web is built on top of TCP for its reliability as a transmission protocol. To start a TCP connection a 3-way handshake is performed. This means additional round-trips (network packets being sent back and forth) for each starting connection which adds significant delays to any new connection.

(Source: Next generation multiplexed transport over UDP (PDF))

If on top of that you also need to negotiate TLS, to create a secure, encrypted, https connection, even more network packets have to be sent back and forth.

(Source: Next generation multiplexed transport over UDP (PDF))

Innovations like TCP Fast Open will improve the situation for TCP, but they aren't widely adopted yet.

UDP on the other hand is more of a fire and forget protocol. A message is sent over UDP and it's assumed to arrive at the destination. The benefit is less time spent on the network to validate packets, the downside is that in order to be reliable, something has to be built on top of UDP to confirm packet delivery.

That's where Google's QUIC protocol comes in.

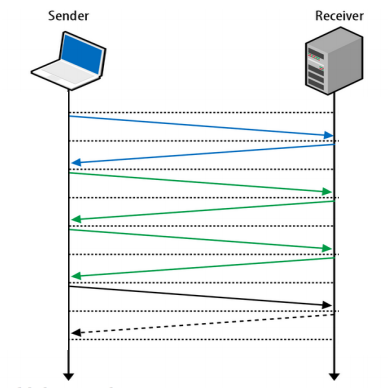

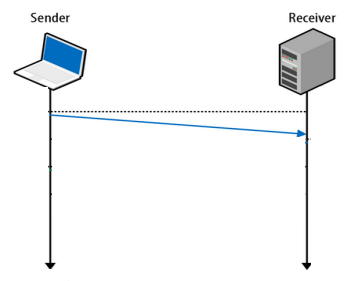

The QUIC protocol can start a connection and negotiate all the TLS (HTTPS) parameters in 1 or 2 packets (depending on whether it's a new server you are connecting to or a known host).

(Source: Next generation multiplexed transport over UDP (PDF))

This can make a huge difference for the initial connection and start of download for a page.

Why is QUIC needed?

It's absolutely mind boggling what the team developing the QUIC protocol is doing. It wants to combine the speed and possibilities of the UDP protocol with the reliability of the TCP protocol.

Wikipedia explains it fairly well.

"As improving TCP is a long-term goal for Google, QUIC aims to be nearly equivalent to an independent TCP connection, but with much reduced latency and better SPDY-like stream-multiplexing support.

"If QUIC features prove effective, those features could migrate into a later version of TCP and TLS (which have a notably longer deployment cycle)."

(Source: Wikipedia, QUIC)

There's a part of that quote that needs emphasising: if QUIC features prove effective, those features could migrate into a later version of TCP.

The TCP protocol is rather highly regulated. Its implementation is inside the Windows and Linux kernels, it's in each phone OS, ... it's pretty much in every low-level device. Improving on the way TCP works is going to be hard, as each of those TCP implementations needs to follow.

UDP on the other hand is relatively simple in design. It's faster to implement a new protocol on top of UDP to prove some of the theories Google has about TCP. That way, once they can confirm their theories about network congestion, stream blocking, ... they can begin their efforts to move the good parts of QUIC to the TCP protocol.

But altering the TCP stack requires work from the Linux kernel & Windows, intermediary middleboxes, users to update their stack, ... Doing the same thing in UDP is much more difficult for the developers making the protocol but allows them to iterate much faster and implement those theories in months instead of years or decades.

Where does QUIC fit in?

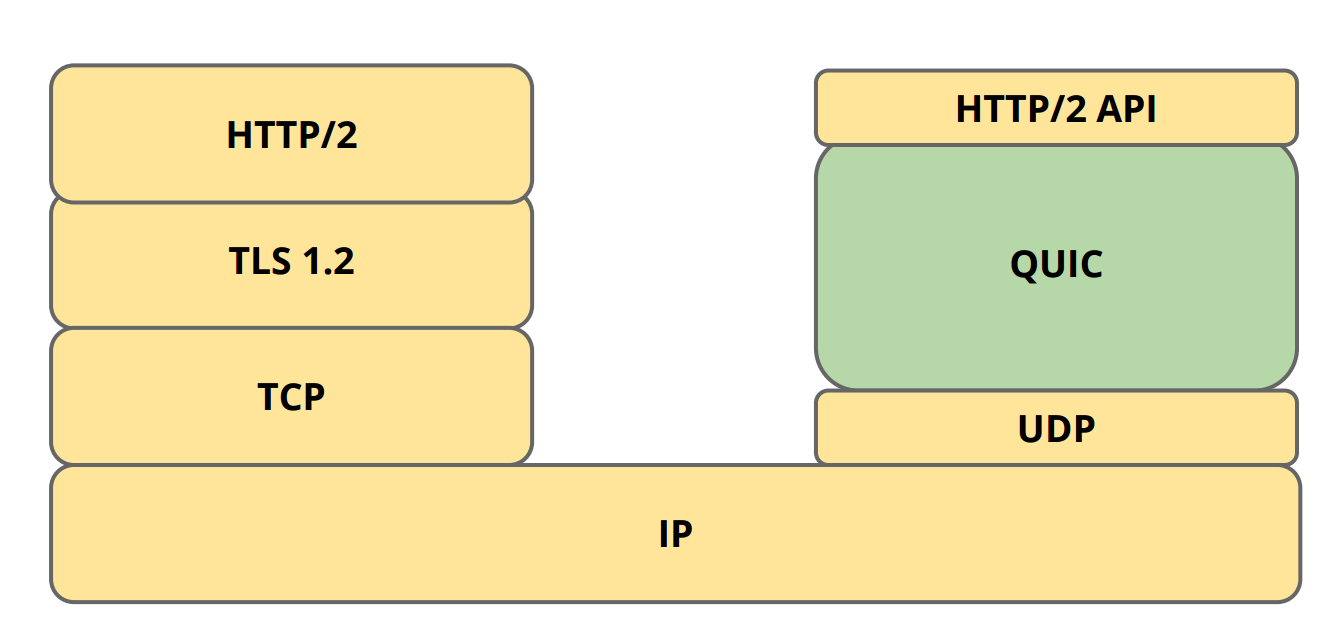

If you look at the layers which make up a modern HTTPS connection, QUIC replaces the TLS stack and parts of HTTP/2.

The QUIC protocol implements its own crypto-layer, so it does not make use of the existing TLS 1.2.

It replaces TCP with UDP and on top of QUIC is a smaller HTTP/2 API used to communicate with remote servers. It's smaller because the multiplexing and connection management are already handled by QUIC. What's left is an interpretation of the HTTP protocol.

TCP head-of-line blocking

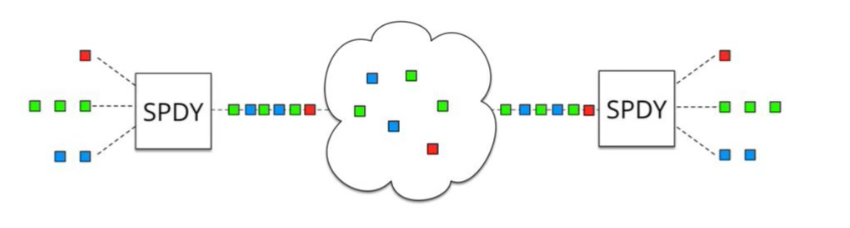

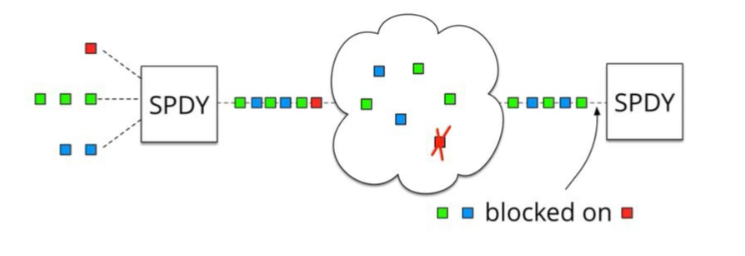

With SPDY and HTTP/2 we now have a single TCP connection being used to connect to a server instead of multiple connections for each asset on a page. That one TCP connection can independently request and receive resources.

(Source: QUIC: next generation multiplexed transport over UDP)

Now that everything depends on that single TCP connection, a downside is introduced: head-of-line blocking.

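In TCP, packets need to arrive and be processed in the correct order. If a packet gets lost, it has to be retransmitted, and the connection has to wait for it before it can continue with the packets behind it.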

(Source: QUIC: next generation multiplexed transport over UDP)

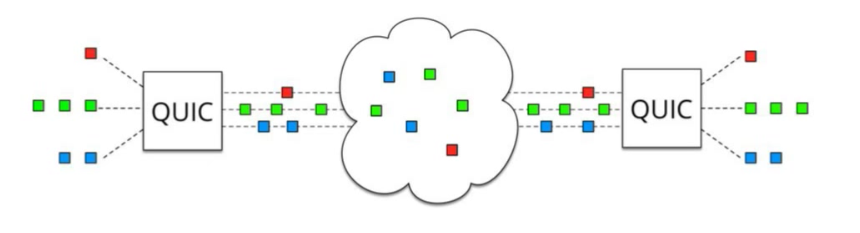

In QUIC, this is solved by not making use of TCP anymore.

UDP is not dependent on the order in which packets are received. While it's still possible for packets to get lost during transit, they will only impact an individual resource (as in: a single CSS/JS file) and not block the entire connection.

(Source: QUIC: next generation multiplexed transport over UDP)

QUIC is essentially combining the best parts of SPDY and HTTP/2 (the multiplexing) on top of a non-blocking transport protocol.
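To make the difference concrete, here is a minimal Python sketch of the two delivery models. It's a toy simulation, not real TCP or QUIC code: the packet list, the stream names and both function names are made up for illustration, and it only models which packets can be handed to the application while one lost packet is waiting to be retransmitted.

```python
def deliverable_single_stream(packets, lost_seq):
    """One ordered stream (TCP-like): nothing behind the gap can be delivered."""
    delivered = []
    for seq, stream in packets:
        if seq == lost_seq:
            break  # every later packet waits for the retransmit
        delivered.append((seq, stream))
    return delivered


def deliverable_per_stream(packets, lost_seq):
    """Ordering per resource (QUIC-like): only the affected stream stalls."""
    blocked = set()
    delivered = []
    for seq, stream in packets:
        if seq == lost_seq:
            blocked.add(stream)  # this resource now waits for the retransmit
            continue
        if stream not in blocked:
            delivered.append((seq, stream))
    return delivered


# Hypothetical packets for three resources multiplexed on one connection.
packets = [(1, "css"), (2, "js"), (3, "css"), (4, "img"), (5, "js"), (6, "img")]

print(deliverable_single_stream(packets, lost_seq=2))
# [(1, 'css')]
print(deliverable_per_stream(packets, lost_seq=2))
# [(1, 'css'), (3, 'css'), (4, 'img'), (6, 'img')]
```

With a single ordered stream, losing packet 2 holds back the CSS and the image as well. With per-stream ordering, only the JS file has to wait.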

Why fewer packets matter so much

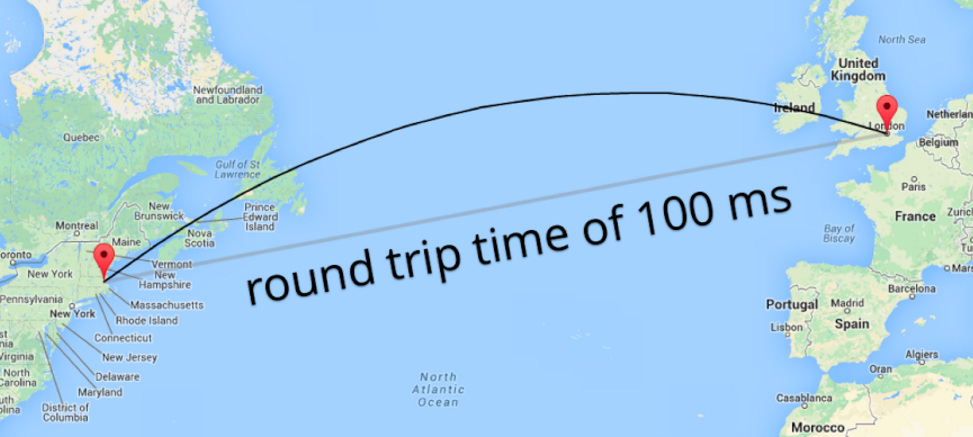

If you're lucky enough to be on a fast internet connection, you can have latencies between you and a remote server in the 10-50ms range. Every packet you send across the network will take that amount of time to be received.

For latencies < 50ms, the benefit may not be immediately clear.

It's mostly noticeable when you are talking to a server on another continent or via a mobile carrier using EDGE, 3G/4G/LTE. To reach a server in the US from Europe, you have to cross the Atlantic Ocean. You immediately get a latency penalty of +100ms or higher purely because of the distance that needs to be traveled.

(Source: QUIC: next generation multiplexed transport over UDP)

Mobile networks have the same kind of latency: it's not unlikely to have a 100-150ms latency between your mobile phone and a remote server on a slow connection, merely because of the radio frequencies and intermediate networks that have to be traveled. In 4G/LTE situations, a 50ms latency is easier to get.

On mobile devices and for large-distance networks, the difference between sending/receiving 4 packets (TCP + TLS) and 1 packet (QUIC) can be up to 300ms of saved time for that initial connection.
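The arithmetic behind that number is simple enough to sketch in a few lines of Python. The exchange counts (4 for TCP + TLS, 1 for QUIC) come from the paragraph above. Charging each exchange one full network latency is a simplifying assumption, and the function names are made up for this example.

```python
# Figures taken from the text above; treating each exchange as one full
# network latency is a simplifying assumption.
TCP_TLS_EXCHANGES = 4
QUIC_EXCHANGES = 1


def setup_time_ms(latency_ms: int, exchanges: int) -> int:
    """Time spent setting up the connection before any content is requested."""
    return latency_ms * exchanges


def saved_ms(latency_ms: int) -> int:
    """Connection setup time saved by QUIC compared to TCP + TLS."""
    return (setup_time_ms(latency_ms, TCP_TLS_EXCHANGES)
            - setup_time_ms(latency_ms, QUIC_EXCHANGES))


for latency in (20, 50, 100, 150):
    print(f"{latency:>3} ms latency: {saved_ms(latency):>3} ms saved")
```

At 100ms of latency this gives the 300ms mentioned above. At 20ms the saving shrinks to 60ms, which is why the benefit is much less visible on a fast connection.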

Forward Error Correction: preventing failure

A nifty feature of QUIC is FEC or Forward Error Correction. Every packet that gets sent also includes enough data of the other packets so that a missing packet can be reconstructed without having to retransmit it.

This is essentially RAID 5 on the network level.

Because of this, there is a trade-off: each UDP packet contains more payload than is strictly necessary, because it accounts for the potential of missed packets that can more easily be recreated this way.

The current ratio seems to be around 10 packets. So for every 10 UDP packets sent, there is enough data to reconstruct a missing packet. A 10% overhead, if you will.

Consider Forward Error Correction as a sacrifice in terms of "data per UDP packet" that can be sent, but the gain is not having to retransmit a lost packet, which would take a lot longer (recipient has to confirm a missing packet, request it again and await the response).
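The RAID 5 comparison can be illustrated with XOR parity. The Python sketch below is a simplified model, not QUIC's actual wire format: it assumes equally sized packets and one extra parity packet per group of 10, which is one way to arrive at roughly 10% overhead. All names in it are made up for the example.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equally sized byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """XOR all packets in a group into a single parity packet."""
    return reduce(xor_bytes, packets)


def recover(received, parity):
    """Rebuild the one missing packet (marked None) from the survivors."""
    survivors = [p for p in received if p is not None]
    return reduce(xor_bytes, survivors, parity)


# Hypothetical group of 10 packets, all 9 bytes long.
group = [f"packet-{i:02d}".encode() for i in range(10)]
parity = make_parity(group)

received = list(group)
received[3] = None  # packet 3 got lost in transit

print(recover(received, parity))  # b'packet-03'
```

This only works when a single packet per group is lost. Lose two and the receiver is back to asking for a retransmit, which is the trade-off between overhead and protection.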

Session resumption & parallel downloads

Another exciting opportunity with the switch to UDP is the fact that you are no longer dependent on the source IP of the connection.

In TCP, you need 4 parameters to make up a connection. The so-called quadruplets.

To start a new TCP connection, you need a source IP, source port, destination IP and destination port. On a Linux server, you can see those quadruplets using netstat.

    $ netstat -anlp | grep ':443'
    ...
    tcp6       0      0 2a03:a800:a1:1952::f:443    2604:a580:2:1::7:57940    TIME_WAIT   -
    tcp        0      0 31.193.180.217:443          81.82.98.95:59355         TIME_WAIT   -
    ...

If any of the parameters (source IP/port or destination IP/port) change, a new TCP connection needs to be made.

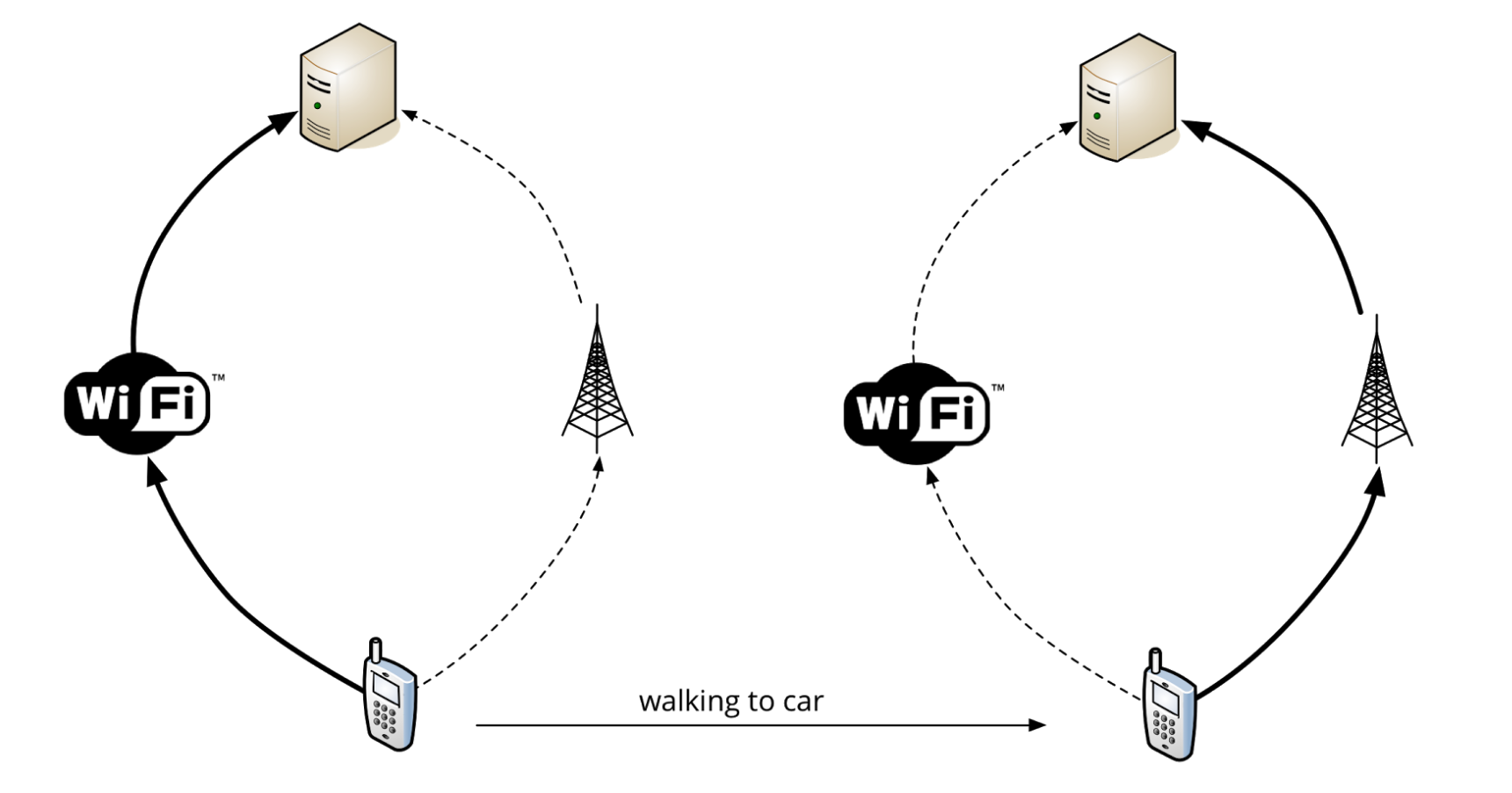

This is why keeping a stable connection on a mobile device is so hard, because you may be constantly switching between WiFi and 3G/LTE.

(Source: QUIC: next generation multiplexed transport over UDP)

With QUIC, since it's now using UDP, there are no quadruplets.

QUIC has implemented its own identifier for unique connections called the Connection UUID. It's possible to go from WiFi to LTE and still keep your Connection UUID, so no need to renegotiate the connection or TLS. Your previous connection is still valid.

This works the same way as the Mosh Shell, keeping SSH connections alive over UDP for a better roaming & mobile experience.

This also opens the doors to using multiple sources to fetch content. If the Connection UUID can be shared over a WiFi and cellular connection, it's in theory possible to use both media to download content. You're effectively streaming or downloading content in parallel, using every available interface you have.

While still theoretical, UDP allows for such innovation.
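To make the difference between the TCP quadruplet and the Connection UUID concrete, here is a small Python sketch of how a server could look up an existing session in both models. It's purely illustrative: the two lookup tables, the function names, the identifier "c0ffee" and the LTE address are all made up, and a real QUIC server does a lot more than a dictionary lookup.

```python
# Hypothetical server-side session tables, for illustration only.
tcp_sessions = {}   # keyed by (source IP, source port, destination IP, destination port)
quic_sessions = {}  # keyed by the connection identifier carried in each packet

SERVER = ("31.193.180.217", 443)


def tcp_find(src_ip, src_port):
    """TCP-like lookup: the full quadruplet has to match."""
    return tcp_sessions.get((src_ip, src_port, *SERVER))


def quic_find(connection_id, src_ip, src_port):
    """QUIC-like lookup: the source address plays no role, only the identifier."""
    return quic_sessions.get(connection_id)


# The client connects over WiFi.
tcp_sessions[("81.82.98.95", 59355, *SERVER)] = "session-A"
quic_sessions["c0ffee"] = "session-A"

# The client roams to LTE and shows up with a new address (made-up example).
lte = ("203.0.113.7", 40112)

print(tcp_find(*lte))             # None
print(quic_find("c0ffee", *lte))  # session-A
```

After the switch to LTE the quadruplet no longer matches, so the TCP-style lookup comes back empty and a new connection is needed. The identifier-based lookup still finds the session.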

The QUIC protocol in action

The Chrome browser has had (experimental) support for QUIC since 2014. If you want to test QUIC, you can enable the protocol in Chrome. Practically, you can only test the QUIC protocol against Google services.

The biggest benefit Google has is the combination of owning both the browser and the server marketshare. By enabling QUIC on both the client (Chrome) and the server (Google services like YouTube, Google.com), they can run large-scale tests of new protocols in production.

There's a convenient Chrome plugin that can show the HTTP/2 and QUIC protocol as an icon in your browser: HTTP/2 and SPDY indicator.

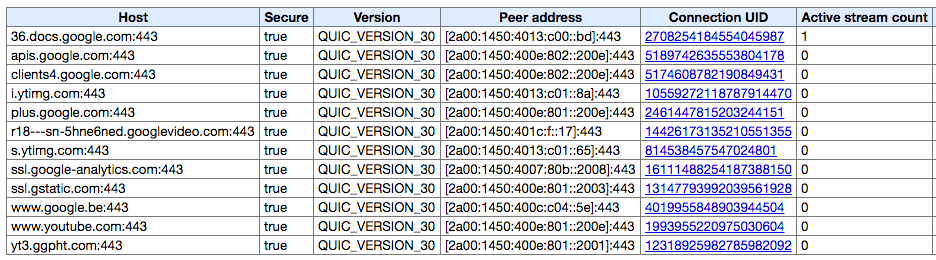

You can see how QUIC is being used by opening the chrome://net-internals/#quic tab right now (you'll also notice the Connection UUID mentioned earlier).

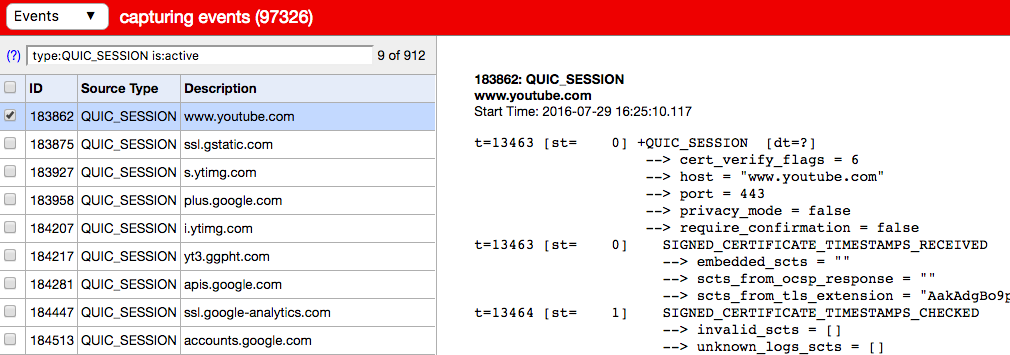

If you're interested in the low-level details, you can even see all the live connections and get individual per-packet captures: chrome://net-internals/#events&q=type:QUIC_SESSION%20is:active.

This is similar to how you can see the internals of a SPDY or HTTP/2 connection.

Won't someone think of the firewall?

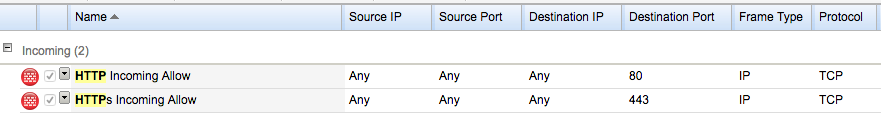

If you're a sysadmin or network engineer, you probably gave a little shrug at the beginning when I mentioned QUIC being UDP instead of TCP. You've probably got a good reason for that, too.

For instance, when we at Nucleus Hosting configure a firewall for a webserver, those firewall rules look like these.

Take special note of the protocol column: TCP.

Our firewall isn't very different from the one deployed by thousands of other sysadmins. At this time, there's no reason for a webserver to allow anything other than 80/TCP or 443/TCP. TCP only. No UDP.

Well, if we want to allow the QUIC protocol, we will need to allow 443/UDP too.

For servers, this means opening incoming 443/UDP to the webserver. For clients, it means allowing outgoing 443/UDP to the internet.

In large enterprises, I can see this being an issue. Getting it past security to allow UDP on a normally TCP-only port sounds fishy.

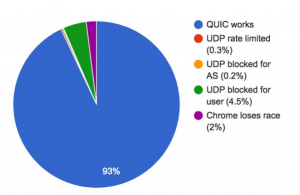

I would've actually thought this to be a major problem in terms of connectivity, but as Google has done the experiments -- this turns out to not be the case.

(Source: QUIC Deployment Experience @Google)

Those numbers were given at a recent HTTP workshop in Sweden. A couple of key pointers:

- Since QUIC is only supported on Google Services now, the server-side firewalling is probably OK.

- These numbers are client-side only: they show how many clients are allowed to do UDP over port 443.

- QUIC can be disabled in Chrome for compliance reasons. I bet there are a lot of enterprises that have disabled QUIC so those connections aren't even attempted.

The advantage of doing things encrypted-only is that Deep Packet Inspection middleware (aka: intrusion prevention systems) can't decrypt the TLS traffic and modify the protocol. They see binary data over the wire and will -- hopefully -- just let it go through.

Running QUIC server-side

Right now, the only webserver that can get you QUIC is Caddy since version 0.9.

Both client-side and server-side support is considered experimental, so it's up to you to run it.

Since no one has QUIC support enabled by default in the client, you're probably still safe to run it and enable QUIC in your own browser(s). (Update: since Chrome 52, everyone has QUIC enabled by default, even to non-whitelisted domains)

To help debug QUIC, I hope curl will implement it soon. There certainly is interest.

Performance benefits of QUIC

In a 2015 blogpost Google has shared several results from the QUIC implementation.

"As a result, QUIC outshines TCP under poor network conditions, shaving a full second off the Google Search page load time for the slowest 1% of connections. These benefits are even more apparent for video services like YouTube. Users report 30% fewer rebuffers when watching videos over QUIC."

(Source: A QUIC update on Google’s experimental transport (2015))

The YouTube statistics are especially interesting. If these kinds of improvements are possible, we'll see a quick adoption in video streaming services like Vimeo or "adult streaming services".

Conclusion

I find the QUIC protocol to be truly fascinating!

The amount of work that has gone into it, the fact that it's already running for the biggest websites available and that it's working blow my mind.

I can't wait to see the QUIC spec become final and implemented in other browsers and webservers!

Update: comment from Jim Roskind, designer of QUIC

Jim Roskind was kind enough to leave a comment on this blog (see below) that deserves emphasising.

Having spent years on the research, design and deployment of QUIC, I can add some insight. Your comment about UDP ports being blocked was exactly my conjecture when we were experimenting with QUIC’s (UDP) viability (before spending time on the detailed design and architecture). My conjecture was that the reason we could only get 93% reachability was because enterprise customers were commonly blocking UDP (perchance other than what was needed for DNS).

If you recall that historically, enterprise customers routinely blocked TCP port 80 "to prevent employees from wasting their time surfing," then you know that overly conservative security does happen (and usability drives changes!). As it becomes commonly known that allowing UDP:443 to egress will provide better user experience (i.e., employees can get their job done faster, and with less wasted bandwidth), then I expect that usability will once again trump security ... and the UDP:443 port will be open in most enterprise scenarios.

... also ... your headline using the words “TCP/2” may well IMO be on target. I expect that the rate of evolution of QUIC congestion avoidance will allow QUIC to track the advances (new hardware deployment? new cell tower protocols? etc.) of the internet much faster than TCP.

As a result, I expect QUIC to largely displace TCP, even as QUIC provides any/all technology suggestions for incorporation into TCP. TCP is routinely implemented in the kernel, which makes evolutionary steps take 5-15 years (including market penetration!… not to mention battles with middle-boxes), while QUIC can evolve in the course of weeks or months.

-- Jim (QUIC Architect)

Thanks Jim for the feedback, it's amazing to see the original author of the QUIC protocol respond!

Further reading

If you're looking for more information, have a look at these resources:

- QUIC: Next generation multiplexed transport over UDP

- QUIC Deployment experiences @ Google

- Design and Rationale of QUIC

- IETF Draft: QUIC: A UDP-Based Secure and Reliable Transport for HTTP/2

-- link https://ma.ttias.be/googles-quic-protocol-moving-web-tcp-udp/

Note: this was copied in full because the author explained the subject very well. Congratulations to the author.
